Abstract

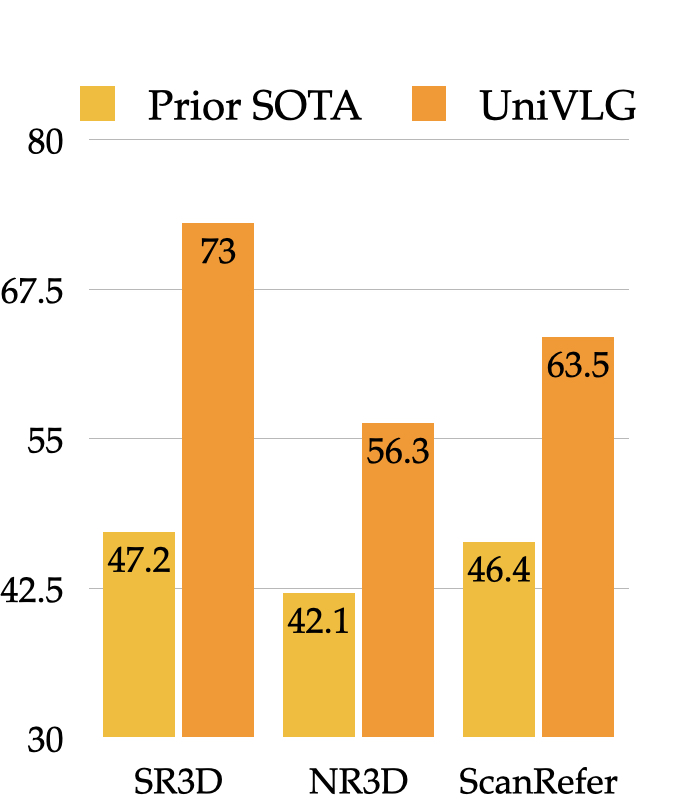

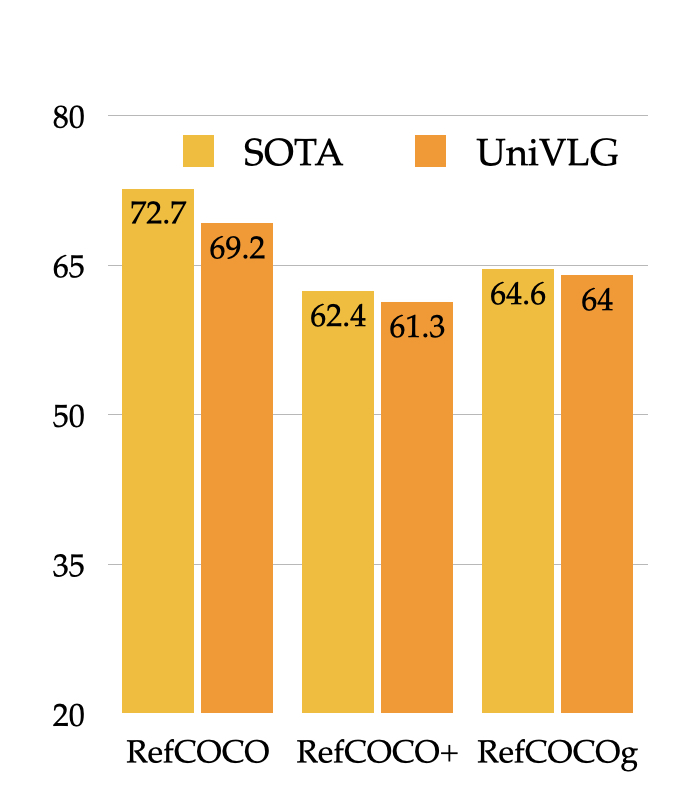

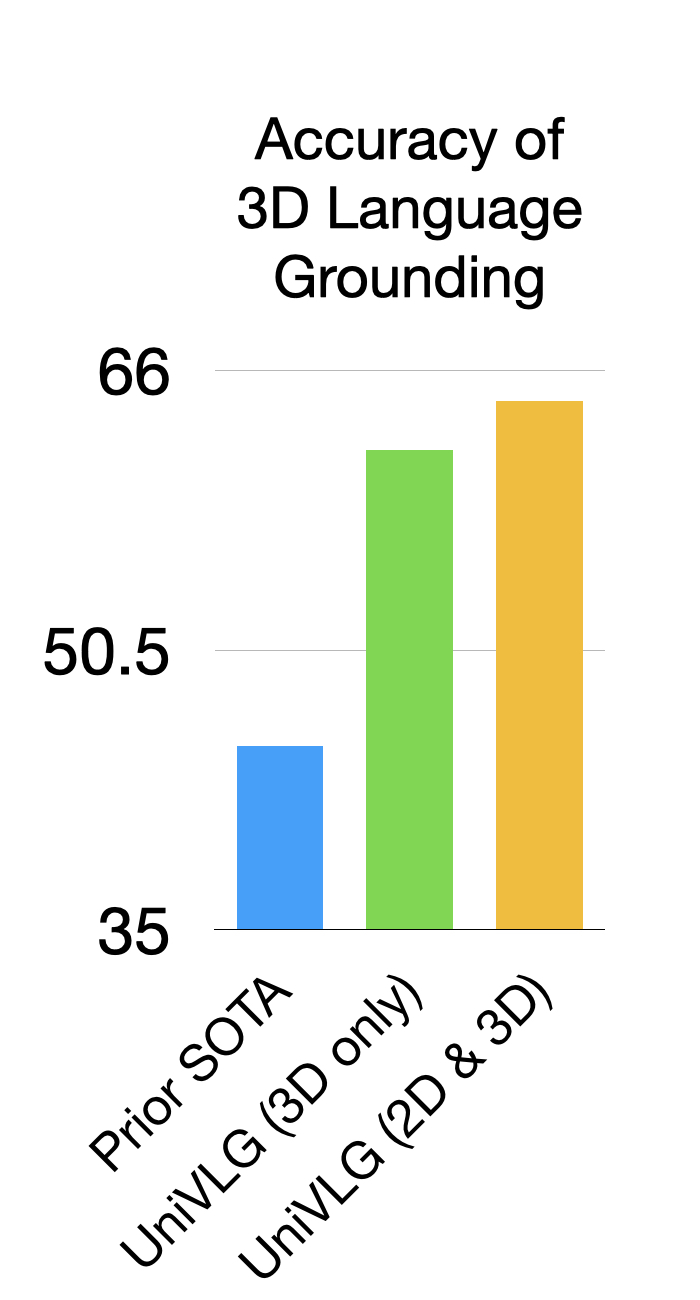

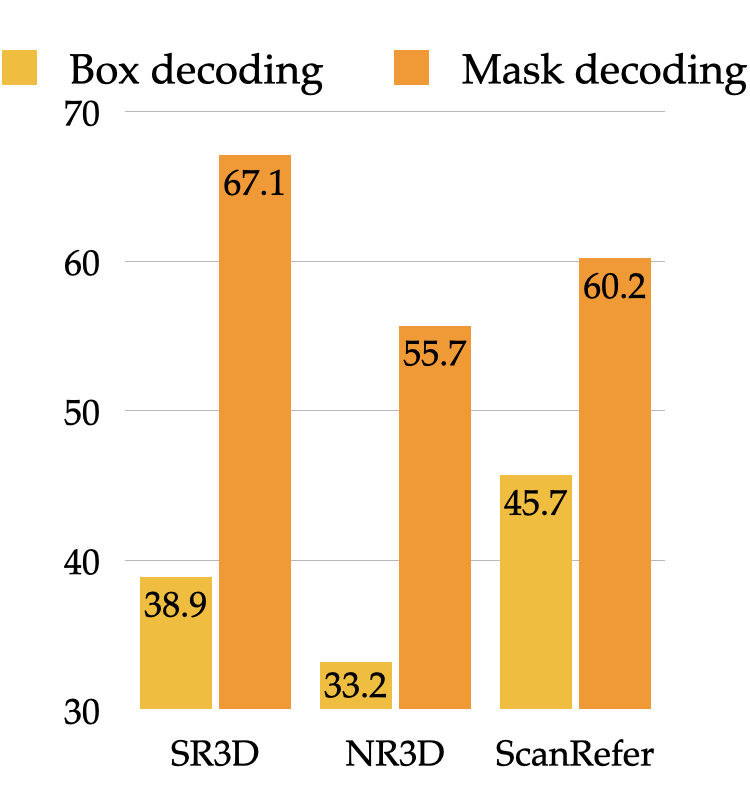

Progress in 3D vision-language learning has been hindered by the scarcity of large-scale 3D datasets. We introduce UniVLG, a unified architecture for 2D and 3D vision-language understanding that bridges the gap between existing 2D-centric models and the rich 3D sensory data available in embodied systems. Our approach initializes most model weights from pre-trained 2D models and trains on both 2D and 3D vision-language data. We propose a novel language-conditioned mask decoder shared across 2D and 3D modalities to ground objects effectively in both RGB and RGB-D images, outperforming box-based approaches. To further reduce the domain gap between 2D and 3D, we incorporate 2D-to-3D lifting strategies, enabling UniVLG to utilize 2D data to enhance 3D performance. With these innovations, our model achieves state-of-the-art performance across multiple 3D vision-language grounding tasks, demonstrating the potential of transferring advances from 2D vision-language learning to the data-constrained 3D domain. Furthermore, co-training on both 2D and 3D data enhances performance across modalities without sacrificing 2D capabilities. By removing the reliance on 3D mesh reconstruction and ground-truth object proposals, UniVLG sets a new standard for realistic, embodied-aligned evaluation.

UniVLG Architecture

UniVLG is a vision language transformer that accepts a language utterance and either (1) a sequence of posed RGB-D images or (2) a monocular RGB image, lifted to 3D (2D to 3D Projection). UniVLG fuses information across vision and language to predict 3D object segments or generate answers. It uses a ViT backbone followed by 3D relative attentions to produce a set of 3D feature tokens. The proposed decoder then iteratively updates a set of learnable queries as well as the 3D feature tokens though token - language - query attentions to decode object segments and match them to noun phrases in the input referential utterance. Masks are decoded through a dot-product between 3D feature tokens and learnable queries. A text decoder predicts answers for the input questions by conditioning on the set of updated object queries.